Service Mesh: Microservices Architecture


We updated this article in 2022 to cover the most important service mesh developments and to assess how widely the practice has been adopted.

What is Service Mesh?

Service Mesh is an architectural practice for managing and visualizing sets of container-based microservices.

In general terms, a service mesh is a software infrastructure layer dedicated to handling communication between microservices. It provides networking services to container-based applications and microservices, and those services are integrated directly within the cluster.

A service mesh provides service monitoring, scalability and high availability through a service API, instead of forcing every microservice to implement them itself. The benefit is a reduction in the operational complexity associated with modern microservice applications.

Data Plane

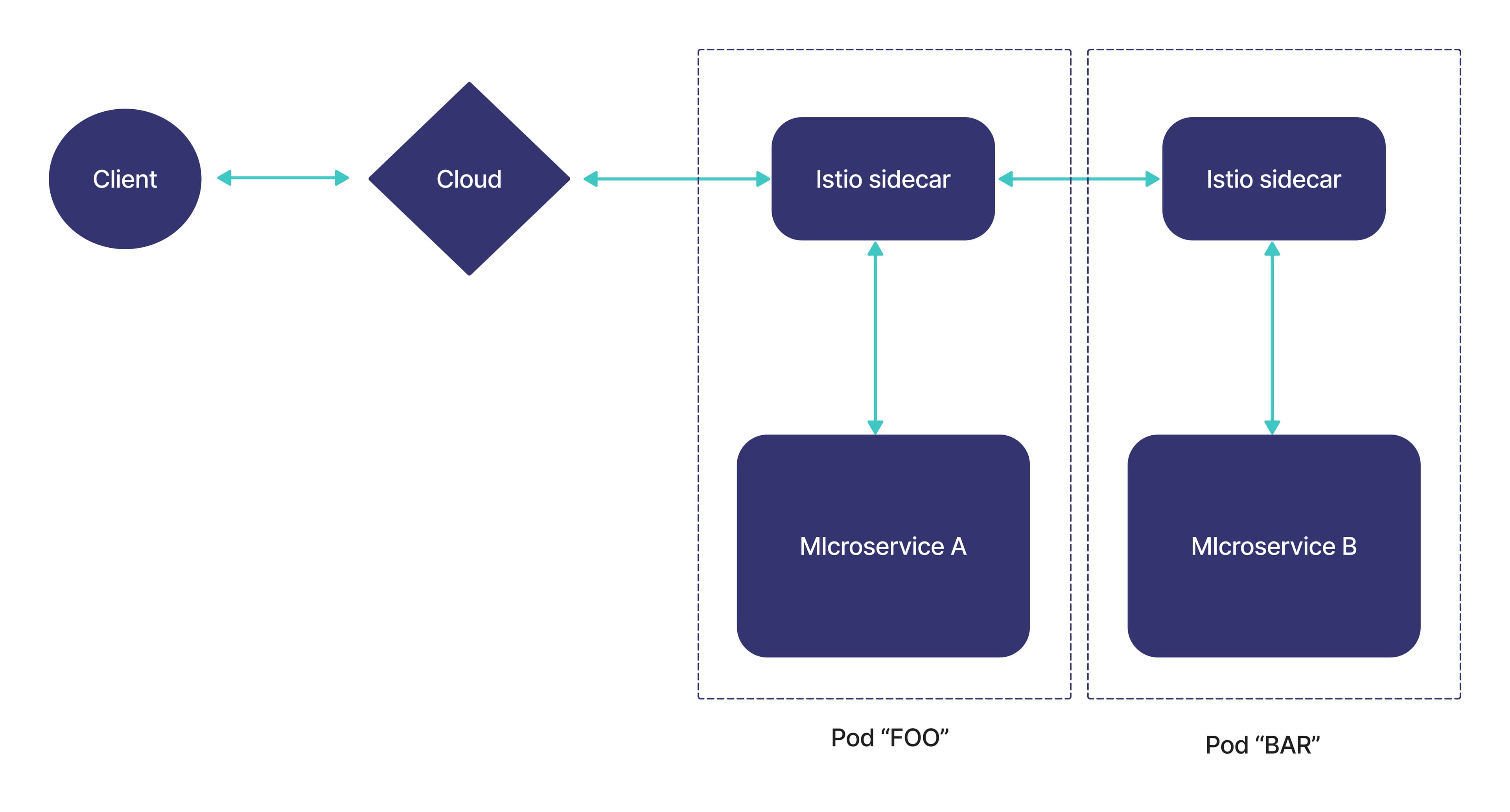

Many Service Mesh implementations use a sidecar proxy to intercept and manage all inbound and outbound traffic of a particular service instance.

The data plane in a service mesh is the set of sidecar proxies deployed alongside each service instance, through which that instance communicates with the other services in the system.

Each sidecar proxy is responsible for translating, forwarding and conditionally observing every network packet flowing to and from its service instance. It also takes care of routing, security, service discovery, observability and load balancing.
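The idea of a proxy that sits in front of a service instance can be illustrated with a minimal Python sketch. This is only an in-process analogy under stated assumptions: the `SidecarProxy` class, the `orders_service` handler and the dictionary-based request format are hypothetical names invented for the illustration, and a real sidecar runs as a separate network proxy rather than as a Python object.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SidecarProxy:
    """Hypothetical in-process stand-in for a sidecar proxy (not a real mesh API)."""

    service_name: str
    handler: Callable[[Dict], Dict]  # the service's own request handler
    observed: List[Dict] = field(default_factory=list)

    def handle_inbound(self, request: Dict) -> Dict:
        """Forward a request to the local service and record what happened."""
        start = time.perf_counter()
        response = self.handler(request)
        latency_ms = (time.perf_counter() - start) * 1000.0
        self.observed.append(
            {
                "service": self.service_name,
                "path": request.get("path"),
                "status": response.get("status"),
                "latency_ms": latency_ms,
            }
        )
        return response


def orders_service(request: Dict) -> Dict:
    """Business logic only: no networking or telemetry code in the service."""
    return {"status": 200, "body": "order found"}


proxy = SidecarProxy("orders", orders_service)
reply = proxy.handle_inbound({"path": "/orders/1"})
```

The service function stays unaware of the proxy, which is the point of the pattern: observation and forwarding live next to the service, not inside it.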

Control Plane

The control plane provides the global configuration of the functionalities executed by all the existing data planes in the Service Mesh, turning this network of data planes into a distributed system.

It is in charge of managing and monitoring all the sidecar proxy instances, and it is where control policies, metrics collection and monitoring are implemented.
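As a rough sketch of this relationship, the following Python example models a control plane that keeps one global policy and pushes it to every registered proxy. The `ControlPlane` and `Proxy` classes and the `timeout_s` and `retries` settings are assumptions made for the illustration; they do not correspond to the API of any particular mesh.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Proxy:
    """Hypothetical data plane proxy that only stores the config it receives."""

    name: str
    config: Dict[str, object] = field(default_factory=dict)

    def apply(self, config: Dict[str, object]) -> None:
        self.config = dict(config)  # keep a private copy of the pushed config


@dataclass
class ControlPlane:
    """Hypothetical control plane holding the global policy for all proxies."""

    policy: Dict[str, object] = field(default_factory=dict)
    proxies: List[Proxy] = field(default_factory=list)

    def register(self, proxy: Proxy) -> None:
        """A new proxy receives the current policy as soon as it joins."""
        self.proxies.append(proxy)
        proxy.apply(self.policy)

    def update_policy(self, **changes: object) -> None:
        """Change the policy once and push it to every registered proxy."""
        self.policy.update(changes)
        for proxy in self.proxies:
            proxy.apply(self.policy)


control_plane = ControlPlane(policy={"timeout_s": 5, "retries": 2})
orders_proxy = Proxy("orders")
payments_proxy = Proxy("payments")
control_plane.register(orders_proxy)
control_plane.register(payments_proxy)
control_plane.update_policy(timeout_s=2)
```

A single call to `update_policy` changes the behavior of every proxy, which is what turns a set of independent proxies into one managed system.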

Deployment Patterns

There are two deployment patterns:

Proxy per host: One proxy is deployed per host. Multiple instances of application services run on the same host, and all of them route traffic through the same proxy instance.

Sidecar proxy: One sidecar proxy is deployed per instance of each service. This model is very useful for deployments using containers or Kubernetes.
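The difference between the two patterns can be made concrete by counting proxies. The sketch below assumes a hypothetical cluster of three hosts running 4, 2 and 5 service instances; the function names are invented for the example.

```python
from typing import List


def proxies_with_host_pattern(instances_per_host: List[int]) -> int:
    """One shared proxy per host, however many instances run on it."""
    return len(instances_per_host)


def proxies_with_sidecar_pattern(instances_per_host: List[int]) -> int:
    """One sidecar proxy per service instance."""
    return sum(instances_per_host)


cluster = [4, 2, 5]  # hypothetical instance counts for three hosts
host_proxies = proxies_with_host_pattern(cluster)        # 3
sidecar_proxies = proxies_with_sidecar_pattern(cluster)  # 11
```

The sidecar pattern runs more proxies, but each one serves a single instance, so a failing or misconfigured proxy affects only that instance.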

How does Service Mesh work?

Teams adopt microservices architectures for benefits such as autonomy, flexibility and modularity. However, breaking a large monolith into containers brings a new challenge: visibility and governance must be provided across an increasingly large ecosystem of small services.

How does a service mesh abstract this complexity away from each microservice? To understand this, let's review the architecture pattern known as sidecar.

With a sidecar pattern, a special small container is created that runs next to each microservice. The name comes from sidecars, the small passenger cars attached to the side of motorcycles. Sidecar is only one of several microservice architecture patterns.

The sidecar container acts as a proxy and implements common functionalities such as routing, authentication and monitoring, leaving the microservices free to focus on their specific functionality. A central controller (the control plane) organizes the connections, directs the traffic flow between the proxies and collects performance metrics.

There are different ways to implement a service mesh, depending mainly on where the functionality shared between the microservices lives:

Per-node service proxy: Each node in the cluster has its own service proxy container.

Per-application service proxy: Each application has its own service proxy, which is shared by all instances of that application.

Discrete devices: A set of discrete devices re-route service chaining traffic. This happens through manual configuration or with a set of adapters and plugins required for service automation.

Per-instance service proxy: Each application instance has its own "sidecar" proxy.


Why is Service Mesh a good fit for microservices architecture?

Microservices require a common set of functionalities such as authentication, security policies, protection against intruders and distributed denial-of-service (DDoS) attacks, load balancing, routing, monitoring and fault management.

In a monolithic application these requirements are implemented only once, but in environments with tens or hundreds of containers it is not practical to repeat them in each one. If these functionalities had to be implemented in every microservice, the work would also have to be done in different programming languages (where applicable) and by different teams.

Thus the need for a more effective architecture arises. One solution to this problem is to create a Service Mesh that provides these functionalities as integrated services within the cluster. Applications and microservices in any environment can then use these shared services as needed.

What problems does Service Mesh solve?

Traffic Management

Good traffic management increases performance and enables a better deployment strategy. Service Mesh rules for traffic routing allow you to easily control traffic flow and API calls between services.

The service mesh simplifies the configuration of service-level properties such as timeouts, circuit breakers, retries and traffic splitting or switching.
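Two of these properties, traffic splitting and circuit breaking, can be sketched in a few lines of Python. This is a simplified illustration, not how any particular mesh implements them: the `pick_version` function, the `CircuitBreaker` class, the version names and the 90/10 weights are all assumptions made for the example, and a real mesh expresses these as proxy configuration rather than application code.

```python
import random
from typing import Callable, Dict


def pick_version(weights: Dict[str, int], rng: random.Random) -> str:
    """Weighted traffic split: choose a service version in proportion to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


class CircuitBreaker:
    """Minimal breaker: opens after max_failures consecutive failed calls."""

    def __init__(self, max_failures: int) -> None:
        self.max_failures = max_failures
        self.failures = 0

    @property
    def is_open(self) -> bool:
        return self.failures >= self.max_failures

    def call(self, func: Callable[[], object]) -> object:
        if self.is_open:
            # Fail fast without touching the struggling service.
            raise RuntimeError("circuit open: request rejected")
        try:
            result = func()
        except Exception:
            self.failures += 1
            raise
        self.failures = 0  # a success resets the consecutive-failure count
        return result


# Hypothetical canary split: roughly 90% of requests to v1, 10% to v2.
rng = random.Random(0)
chosen = pick_version({"v1": 90, "v2": 10}, rng)
```

This sketch never closes the breaker again once it opens; production implementations usually add a recovery period after which a trial request is let through.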

Observability

As services become more complex, understanding their behavior and performance becomes a challenge. Service Mesh generates detailed telemetry that gives complete visibility into all communications within the service network, including success rates, latencies and request volume for each service.

With this telemetry you can observe the behavior of the monitored service, allowing operators to troubleshoot potential problems. In addition, you can maintain and optimize your applications by gaining a deeper understanding of how services interact.
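To make the kind of telemetry described above concrete, the following sketch aggregates per-request records into request volume, success rate and average latency for each service. The record format and the `summarize` function are assumptions for the illustration, as is the choice to count any status below 500 as a success.

```python
from collections import defaultdict
from typing import Dict, List


def summarize(records: List[Dict]) -> Dict[str, Dict[str, float]]:
    """Aggregate per-request records into per-service metrics."""
    grouped: Dict[str, List[Dict]] = defaultdict(list)
    for record in records:
        grouped[record["service"]].append(record)

    summary: Dict[str, Dict[str, float]] = {}
    for service, items in grouped.items():
        # Assumption: any status below 500 counts as a successful request.
        successes = sum(1 for r in items if r["status"] < 500)
        summary[service] = {
            "requests": len(items),
            "success_rate": successes / len(items),
            "avg_latency_ms": sum(r["latency_ms"] for r in items) / len(items),
        }
    return summary


# Hypothetical records, as a proxy might emit them for each request.
records = [
    {"service": "orders", "status": 200, "latency_ms": 10},
    {"service": "orders", "status": 200, "latency_ms": 30},
    {"service": "orders", "status": 503, "latency_ms": 20},
    {"service": "orders", "status": 200, "latency_ms": 20},
    {"service": "payments", "status": 200, "latency_ms": 50},
]
metrics = summarize(records)
```

Because the proxies produce these records for every service in the same format, operators can compare services without instrumenting each one separately.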

Security Capabilities

Microservices have specific security needs, such as protection against third-party attacks, flexible access controls, mutual Transport Layer Security (TLS) and auditing tools.

Service Mesh offers comprehensive security that gives operators the ability to address all of these issues. It also provides strong identity, transparent TLS encryption, and authentication, authorization, and auditing tools to protect services and data.
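The two ideas in this section, mutual TLS and identity-based access control, can be sketched with Python's standard `ssl` module and a simple allow-list. This is an illustration under stated assumptions: the service names and the `ALLOWED_CALLERS` table are hypothetical, and in a mesh both the certificates and the policy are managed by the proxies and the control plane, not by application code.

```python
import ssl
from typing import Dict, Set


def mutual_tls_server_context() -> ssl.SSLContext:
    """Server-side TLS context that also requires a certificate from the client."""
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # Requiring a client certificate is what makes the TLS handshake "mutual".
    context.verify_mode = ssl.CERT_REQUIRED
    # A real deployment would also call context.load_cert_chain(...) and
    # context.load_verify_locations(...) with certificates issued by the mesh.
    return context


# Hypothetical policy: which caller identities may reach each target service.
ALLOWED_CALLERS: Dict[str, Set[str]] = {
    "payments": {"orders"},
    "orders": {"frontend"},
}


def is_authorized(caller_identity: str, target_service: str) -> bool:
    """Allow the call only if the verified caller identity is on the allow-list."""
    return caller_identity in ALLOWED_CALLERS.get(target_service, set())


context = mutual_tls_server_context()
frontend_to_payments = is_authorized("frontend", "payments")  # False
orders_to_payments = is_authorized("orders", "payments")      # True
```

The authorization check only makes sense because mutual TLS has already established who the caller is; the identity comes from the verified certificate, not from anything the caller claims in the request.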

What is Istio? - Istio Service Mesh

Istio is an open source Service Mesh implementation that automates an application's network functions in a simple and flexible way, independent of the programming language.

Gloo Mesh

Gloo Mesh is an Istio-based Service Mesh and control plane that simplifies and unifies the configuration, operation and visibility of service-to-service connectivity within distributed applications.

Gloo Edge

Gloo Edge is a feature-rich, Kubernetes-native ingress controller and a next-generation API gateway. It is exceptional at:

Function-level routing.

Support for legacy applications and microservices.

Discovery capabilities.

Tight integration with major open source projects.

Gloo Edge is uniquely designed to support hybrid applications, where multiple architectures, technologies, clouds and protocols can co-exist.

Gloo Modules

GraphQL: A query language for APIs, typically used to run queries from user interfaces. It is flexible and powerful for extracting data from microservices because data fetching is declarative: the client specifies exactly what data it needs from an API and the underlying services.

Gloo Gateway: Provides an Istio-native API gateway and Kubernetes Ingress functionality. It can be deployed as a gateway with a layered or virtual configuration, depending on the requirements of the network architecture.

Gloo Portal: It is an API developer portal, native to Kubernetes and built to run natively with Istio. It reduces complexity and allows developers to publish, document, share, discover, and use APIs with rich controls, comprehensive security, and detailed configuration information.

Istio vs Linkerd

Istio is complex in part because it is built on top of the Envoy proxy, which has a reputation for being difficult to use. Linkerd, on the other hand, focuses on Kubernetes and container environments, and other deployment types are not as tightly integrated as they are with Istio.

This complexity gives Istio capabilities that Linkerd doesn't have, such as support for virtual machines and legacy software alongside containers, all with traffic controlled from a single control plane. Istio is a good choice for enterprises running complex infrastructure, but it can be too much for smaller Kubernetes-based deployments.

Service Mesh, microservices, middleware and API gateways

Doesn't Service Mesh do what microservices do?

A microservices architecture allows changes to be made to individual services without redeploying the entire application. Microservices communicate with each other and can fail individually without disrupting the entire application.

What makes microservices possible is service-to-service communication. The logic that governs this communication can be hard-coded into each service without a Service Mesh, but as the communication becomes more complex, the Service Mesh becomes more valuable.

How does Service Mesh differ from ESB / middleware?

A big difference is that Service Mesh focuses on operational logic and not on business logic. An ESB (Enterprise Service Bus), by contrast, took on business logic, which is what caused its downfall.

How does Service Mesh differ from API gateways?

They differ in that API gateways handle ingress concerns, that is, traffic entering the cluster, but not the communication within the cluster. API gateways also often carry a certain amount of business logic, e.g. "User A can only make 25 requests per day".
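A rule such as "User A can only make 25 requests per day" is an example of the business logic a gateway might enforce. A minimal sketch of such a quota, assuming an in-memory counter and a hypothetical `DailyQuota` class, could look like this:

```python
from collections import defaultdict
from datetime import date
from typing import DefaultDict, Tuple


class DailyQuota:
    """Hypothetical per-user daily request quota, kept in memory."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.counts: DefaultDict[Tuple[str, date], int] = defaultdict(int)

    def allow(self, user: str, day: date) -> bool:
        """Return True and count the request if the user is still under the limit."""
        key = (user, day)
        if self.counts[key] >= self.limit:
            return False
        self.counts[key] += 1
        return True


quota = DailyQuota(limit=25)
today = date(2022, 1, 1)
results = [quota.allow("user-a", today) for _ in range(26)]
# The first 25 requests are allowed and the 26th is rejected.
```

The rule depends on who the user is and what the business has agreed with them, which is why it belongs at the gateway and not in the mesh, where logic is operational and applies to service-to-service traffic.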

Main advantages of Service Mesh

Enables small businesses to create highly scalable architectures and functions.

Accelerates application development, testing and deployment.

Applications are updated faster and more efficiently.

An agile, decoupled data layer of proxies placed next to each container in the cluster can be very useful and efficient when managing network services.

Greater freedom in creating innovative applications with container-based environments.

Provides the set of infrastructure services required by any application: load balancing, traffic management, routing, monitoring, application control, configuration and security features, etc.

Main disadvantages of Service Mesh

Many of the initial service mesh issues or growing pains have been resolved at this point. However, we can keep the following considerations in mind:

Using a Service Mesh increases the number of runtime instances.

Each service call must first run through the sidecar proxy, which adds an additional step.

Service Mesh does not address integration with other services or systems, nor routing types or transformation mapping.

Although the complexity of network administration is reduced and centralized, it is not eliminated. Therefore, someone must integrate the Service Mesh into workflows and manage its configuration.

You may not need a service mesh architecture until you have a complex topology where an operation involves several microservices calling each other.

We recommend exploring techniques such as canary deployments, progressive rollouts such as rolling updates, monitoring with APM (application performance monitoring) and distributed logging or tracing. Additionally, take advantage of the auto-scaling and health check features of platforms such as Kubernetes and OpenShift.

What is the future of Service Mesh? Should I be using a Service Mesh in 2022?

The frenetic pace at which enterprises have been adopting Service Mesh shows little sign of slowing down. As has happened with other very successful technologies, Service Mesh is likely to take a back seat in the future: it will be taken for granted, without much attention being paid to it.

The future of Service Mesh is not so much in how the technology as such is advancing, but rather in the things it unlocks. The question is what kind of new things can be done after implementing Service Mesh, which in this case opens up many possibilities.

If your organization is interested in implementing microservices or containerization strategies from experts, we invite you to contact us.